In early January of this year I was implementing some JavaScript code that does a lot of processing on the client side with the help of jQuery. It’s part of a project where my customer asked for the possibility of answering a questionnaire in two ways: using a Wizard (one question at a time) and Listing (all questions at once) in a single form. The client side code for the Listing is the culprit and the subject of this post. It’s also related to this question I posted at StackOverflow on February 19:

Why ASP.NET MVC default Model Binder is slow? It's taking a long time to do its work

That question on SO covers the inverse side, that is, the server receiving the client side data. It’s just the other “part” of the problem, which I also managed to solve. I really hope that this post helps you understand the problem with the client side part at least…

One of the things that I didn’t like in that JavaScript code was that while the questions were being processed by the JavaScript/jQuery client side code before being rendered, the browser UI thread froze many times. I think it’s worth mentioning here that I didn’t get Firefox’s warning prompt for "Unresponsive script". Anyway, the simple fact that the page freezes gives the user, and me as the developer, a feeling that something is wrong with the code. As users we expect things to be fluid. We expect a good UI experience, and seeing your browser freeze while loading a web page is not the best impression one may have of your software product.

I’m more experienced with server side code and had never seen any of my JavaScript code run slow on the client. I started searching on Google for why this was happening and stumbled on this really helpful and insightful article at O’Reilly Answers:

Yielding with JavaScript Timers

Read it carefully to get a grasp of the concepts involved. I read it maybe thrice at least to understand the code and adapt it to the problem at hand. It fitted so well in my situation that the result I got after adapting the code was mind blowing.

The part that really got my attention was the Timed Code section, way down in that O’Reilly article. It tells us that processing items (questions in my case) in batches, instead of processing everything at once or one at a time, is a more efficient way to avoid blocking the UI.

The JavaScript code I implemented processes a bunch of questions I receive from the server to prepare them to be shown to the user. Using an AJAX GET request’s success callback I dumped all the questions (usually 100 to 200, sometimes more, each one formatted with the help of an ASP.NET partial view) inside a div element $("#questions") like this:

// Loading Questions for the Chapter selected...
function loadQuestions(chapterId) {
    $("#questions").fadeOut('slow').empty();
    $.ajax({
        type: "GET",
        url: "@Url.Action(MVC.UserAssessment.ActionNames.List, MVC.UserAssessment.Name)",
        data: { assessmentId: @Model.AssessmentId, chapterId : chapterId },
        cache: false,
        success: function(questions) {
            $("#questions").html(questions);
            setUpQuestions();
        },
        error: function() {
            alert("@Html.Raw(Localization.UnknownErrorAjax)");
        }
    });
}

With this I had all the questions’ HTML beautifully inserted on the page but I needed to process this HTML before showing it to the user. That’s where the setUpQuestions(); method played its role. It does the heavy lifting and was where the whole thing went wrong: the browser hung from time to time while inside that method… How did I discover that the problem was inside that method? I used Firebug’s Console and JavaScript’s Date object as shown in that O’Reilly article. In setUpQuestions I use jQuery’s find, set up form field validation with the jQuery validation plugin, disable/enable fields, apply CSS styles to the questions, etc., and the poor browser JavaScript engine had to tackle all of this at once.

To profile setUpQuestions I created a stopWatch JavaScript method that receives setUpQuestions method as the func parameter:

function stopWatch(func) {
    // Timestamps in milliseconds taken before and after running func.
    var start = +new Date(), stop;
    func();
    stop = +new Date();

    if (stop - start < 50) {
        //alert("Just about right.");
        console.log("Just about right.");
    } else {
        //alert("Taking too long.");
        console.log("Taking too long.");
    }
}

The author of that O’Reilly article recommends never letting any JavaScript code execute for longer than 50 milliseconds continuously, just to make sure the code never gets close to affecting the user experience by blocking the UI thread.
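To make the 50 millisecond budget a bit more concrete, here is a minimal sketch of a measuring helper. The names measure and busyWork are made up for this illustration and are not from the article; busyWork simply spins for roughly 80 ms to stand in for a slow function.

```javascript
// Hypothetical helper: runs func once and reports how long it blocked.
function measure(func, budgetMs) {
  var start = Date.now();
  func();
  var elapsed = Date.now() - start;
  return { elapsed: elapsed, withinBudget: elapsed < budgetMs };
}

// Stand-in for slow code: busy-waits for about 80 ms (an arbitrary value).
function busyWork() {
  var end = Date.now() + 80;
  while (Date.now() < end) { /* spin */ }
}

var result = measure(busyWork, 50);
console.log(result.withinBudget ? "Just about right." : "Taking too long.");
```

Returning the elapsed time instead of only logging a message makes it easier to compare runs with different numbers of questions.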

When I ran the code selecting different chapters with varying numbers of questions I kept getting “Taking too long”, which confirmed where the problem was. I knew it was time to adapt the code presented in the Timed Code section of that O’Reilly article. Here it is, commented where appropriate to make it a little bit easier to understand:

/// More about it here: http://answers.oreilly.com/topic/1506-yielding-with-javascript-timers/
function timedProcessArray(items, process, callback) {
    var todo = $.makeArray(items);

    // The first call to setTimeout() creates a timer to process the first item in the array.
    setTimeout(function () {
        var start = +new Date();

        do {
            // Calling todo.shift() returns the first item and also removes it from the array.
            process(todo.shift());
        }
        // After processing the item, a check is made to determine whether there are more items
        // to process and if the time hasn't exceeded the threshold of 50 milliseconds.
        while (todo.length > 0 && (+new Date() - start < 50));

        if (todo.length > 0) {
            // Because the next timer needs to run the same code as the original,
            // arguments.callee is passed in as the first argument.
            setTimeout(arguments.callee, 25);
        } else {
            // If there are no further items to process, then a callback() function is called.
            if (callback) {
                callback(items);
            }
        }
    }, 25);
}

This code made the whole thing fluid and now the user has a much better experience while interacting with the page despite it having a lot of form controls. What it basically does is this: it processes a batch of items/questions, allows the UI thread to take some processing time, and repeats until there are no more questions left in the todo array. The setup logic from setUpQuestions is what gets passed in as the parameter called process (named setupQuestion in the modified loadQuestions), and it is called once per question.
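One thing worth knowing about the batching function is that arguments.callee is not available in strict mode. Below is a sketch of the same idea using a named function expression instead. It is my own variation, not the article's code: the names processBatch and processItem are made up, and Array.prototype.slice stands in for $.makeArray so the snippet runs without jQuery.

```javascript
// Sketch: same batching idea, but the timer callback has a name (processBatch)
// so it can reschedule itself without arguments.callee.
// Like the original, this assumes items is not empty.
function timedProcessArray(items, processItem, callback) {
  var todo = Array.prototype.slice.call(items); // work on a copy

  setTimeout(function processBatch() {
    var start = Date.now();

    // Process items until the array is empty or the 50 ms budget is spent.
    do {
      processItem(todo.shift());
    } while (todo.length > 0 && Date.now() - start < 50);

    if (todo.length > 0) {
      setTimeout(processBatch, 25); // yield to the UI thread, then continue
    } else if (callback) {
      callback(items); // everything is processed
    }
  }, 25);
}

// Usage with made-up data: double each number, then report when done.
var doubled = [];
timedProcessArray([1, 2, 3], function (n) {
  doubled.push(n * 2);
}, function (items) {
  console.log("Processed " + items.length + " items:", doubled);
});
```

Nothing is processed synchronously: the first batch only starts after the initial 25 ms timer fires, which is what gives the browser a chance to paint in between.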

In my specific case, each question has 4 HTML input elements (input/text, select, etc.). If the user selects a Manual’s Chapter to answer and this Chapter contains 230 questions, for example, the <form> element will contain about 920 controls (4 x 230). That's a lot of controls to be processed by the JavaScript code inside the setUpQuestions method. Now, no matter how many controls are present in the HTML code, timedProcessArray will handle this easily, allowing the UI thread to breathe from time to time.
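To get a feeling for how those 230 questions would be split up, here is a small simulation of the do/while batching loop using a fake clock instead of real timers. The 4 ms cost per question is a made-up number picked for illustration, not a measurement from my page, and simulateBatches is a hypothetical helper.

```javascript
// Simulates the batching loop with a fake clock (no real timers involved).
// costMs is an assumed, constant processing cost per question.
function simulateBatches(questionCount, costMs, budgetMs) {
  var batches = [];
  var remaining = questionCount;

  while (remaining > 0) {
    var elapsed = 0;
    var processed = 0;
    do {
      elapsed += costMs; // "process" one question
      processed += 1;
      remaining -= 1;
    } while (remaining > 0 && elapsed < budgetMs);
    batches.push(processed); // one entry per timer callback
  }
  return batches;
}

var batches = simulateBatches(230, 4, 50);
console.log(batches.length + " batches, largest has " +
  Math.max.apply(null, batches) + " questions");
// prints "18 batches, largest has 13 questions"
```

Under that assumption each batch blocks the UI thread for about 52 ms at most, instead of one long freeze of roughly 920 ms.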

This is the modified version of the loadQuestions method that makes use of this life saving timedProcessArray method where setTimeout shines:

// Loading Questions for the Chapter selected...
function loadQuestions(chapterId)
{
    $("#questions").empty();

    $.ajax({
        type: "GET",
        url: "@Url.Action(MVC.SAvE.UserAssessment.ActionNames.Answer, MVC.SAvE.UserAssessment.Name)",
        data: { assessmentId: '@Model.AssessmentId', chapterId : chapterId, format: '@Assessment.Format.List' },
        cache: false,
        success: function(data)
        {
            var questions = $(data);

            questions.hide().appendTo("#questions");

            //stopWatch(function(){ return timedProcessArray(questions, setupQuestion, stats)});
            timedProcessArray(questions, setupQuestion);

            //stats(questions);
        },
        error: function()
        {
            alert("@Html.Raw(Localization.UnknownErrorAjax)");
        }
    });
}

I didn’t pass a callback function to timedProcessArray but that’s up to you.

Hope it helps you get the most out of your processing-intensive JavaScript code.

As a last note, with the arrival of HTML5 we now have Web Workers but browser support is still limited. Things are getting better for us developers. In the near future this will be standard for sure but till then we must find a way to solve the problem with the proven tools/code. setTimeout is one of them.

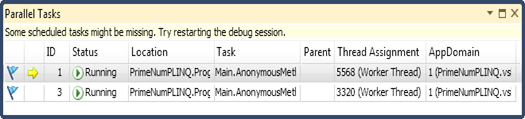

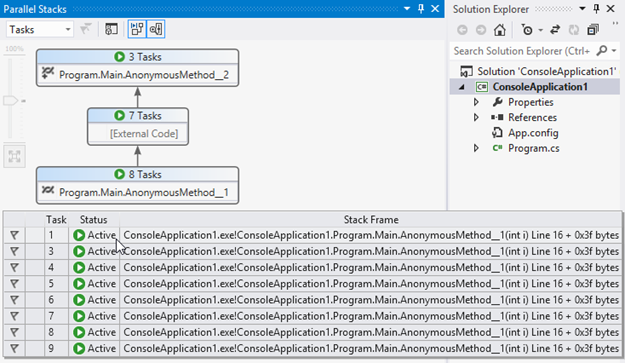

Figure 3 - The Parallel Stacks window in Visual Studio 2012 ( #8 threads )
