I decided to take a different path to learning iPhone software development: instead of online tutorials and Apple docs, I got a book. I had postponed my desire to learn, but it's time to revive it. I grabbed a beginner's book on the subject: A Beginner's Guide to iOS SDK Programming by James A. Brannan & Blake Ward. The book covers iOS 4.2 and Xcode 4, while iOS 5 is on the verge of being released…

I had to download the latest Xcode and its accompanying SDK bits again (3.17 GB), because the ones I had installed were out of date (from September 2010). It was just a matter of opening the Mac App Store and searching for Xcode. The download has everything you need to install to follow the book's samples.


As I started creating the sample projects in Xcode, I thought it'd be an excellent opportunity to store these samples in an online repository (repo) with source control, both for my own future reference and so beginner developers can download and study all the samples. It's also a good chance to play with Git, since I've been using Subversion for the last few years.

This post covers the basics of integrating Xcode with GitHub for Mac OS users. GitHub is a web-based hosting service for software development projects that use the Git revision control system.

I try to synthesize the lengthy and scattered docs you find on the subject and provide links to key docs and posts…

I learned how to use the Xcode Organizer to integrate my online GitHub repository with Xcode's built-in support for source control management, and I started sending the projects to GitHub right after the second book sample. It's better to start early or you'll never do it!

The Mac OS Developer Library article Managing Versions of Your Project has everything you need to configure your project to use it with Git.

When you create an online/remote repository on GitHub, you get instructions on how to set up the repo, such as getting a copy of it to work on locally or sending an existing project to it. This GitHub help article clarifies some things: Set up Git in Mac OS.

This post has the steps you have to follow to get a GitHub repo to work with Xcode projects: Version Control System with XCode 4 and Git Tutorial.

You’ll have to use Mac OS Terminal to type some commands. Nothing difficult.

As a matter of fact, you should familiarize yourself with Terminal if you haven't yet. The real fun is when you play with git commands in Terminal (take, for example, the powerful rebase command). Later in this post I'm going to use the support built into Xcode, which has a UI for basic git commands such as commit, push, pull and merge, but Xcode doesn't give you the power of the full set of git commands, which are only available through the command line.

These are the Terminal commands I typed to send the book’s initial sample projects (QuickStart and C Main Project) to my remote repository located at GitHub: https://github.com/leniel/iPhone-Beginner-Guide

Take a special look at the git commands:

```
Last login: Fri Aug 19 19:29:32 on ttys001
Leniel-Macaferis-Mac-mini:~ leniel$ cd /
Leniel-Macaferis-Mac-mini:/ leniel$ cd iPhone
Leniel-Macaferis-Mac-mini:iPhone leniel$ cd Local
Leniel-Macaferis-Mac-mini:Local leniel$ ls
C Main Project iPhone Beginner's Guide.xcworkspace
QuickStart
Leniel-Macaferis-Mac-mini:Local leniel$ cd QuickStart
Leniel-Macaferis-Mac-mini:QuickStart leniel$ ls
QuickStart QuickStart.xcodeproj
Leniel-Macaferis-Mac-mini:QuickStart leniel$ git remote add origin git@github.com:leniel/iPhone-Beginner-Guide.git
Leniel-Macaferis-Mac-mini:QuickStart leniel$ git push -u origin master
Counting objects: 16, done.
Delta compression using up to 2 threads.
Compressing objects: 100% (14/14), done.
Writing objects: 100% (16/16), 8.27 KiB, done.
Total 16 (delta 2), reused 0 (delta 0)
To git@github.com:leniel/iPhone-Beginner-Guide.git
* [new branch] master -> master
Branch master set up to track remote branch master from origin.
Leniel-Macaferis-Mac-mini:Local leniel$ cd "C Main Project"
Leniel-Macaferis-Mac-mini:C Main Project leniel$ ls
C Main Project C Main Project.xcodeproj
Leniel-Macaferis-Mac-mini:C Main Project leniel$ git push -u origin master
To git@github.com:leniel/iPhone-Beginner-Guide.git
! [rejected] master -> master (non-fast-forward)
error: failed to push some refs to 'git@github.com:leniel/iPhone-Beginner-Guide.git'
To prevent you from losing history, non-fast-forward updates were rejected.
Merge the remote changes (e.g. 'git pull') before pushing again. See the 'Note about fast-forwards' section of 'git push --help' for details.
Leniel-Macaferis-Mac-mini:C Main Project leniel$ git pull origin master
warning: no common commits
remote: Counting objects: 16, done.
remote: Compressing objects: 100% (12/12), done.
remote: Total 16 (delta 2), reused 16 (delta 2)
Unpacking objects: 100% (16/16), done.
From github.com:leniel/iPhone-Beginner-Guide
* branch master -> FETCH_HEAD
Merge made by recursive.
QuickStart.xcodeproj/project.pbxproj | 288 ++++++++++++++
QuickStart/QuickStart-Info.plist | 38 ++
QuickStart/QuickStart-Prefix.pch | 14 +
QuickStart/QuickStartAppDelegate.h | 19 +
QuickStart/QuickStartAppDelegate.m | 73 ++++
QuickStart/QuickStartViewController.h | 13 +
QuickStart/QuickStartViewController.m | 44 +++
QuickStart/en.lproj/InfoPlist.strings | 2 +
QuickStart/en.lproj/MainWindow.xib | 444 ++++++++++++++++++++++
QuickStart/en.lproj/QuickStartViewController.xib | 156 ++++++++
QuickStart/main.m | 17 +
11 files changed, 1108 insertions(+), 0 deletions(-)
create mode 100644 QuickStart.xcodeproj/project.pbxproj
create mode 100644 QuickStart/QuickStart-Info.plist
create mode 100644 QuickStart/QuickStart-Prefix.pch
create mode 100644 QuickStart/QuickStartAppDelegate.h
create mode 100644 QuickStart/QuickStartAppDelegate.m
create mode 100644 QuickStart/QuickStartViewController.h
create mode 100644 QuickStart/QuickStartViewController.m
create mode 100644 QuickStart/en.lproj/InfoPlist.strings
create mode 100644 QuickStart/en.lproj/MainWindow.xib
create mode 100644 QuickStart/en.lproj/QuickStartViewController.xib
create mode 100644 QuickStart/main.m
Leniel-Macaferis-Mac-mini:C Main Project leniel$ git push -u origin master
Counting objects: 14, done.
Delta compression using up to 2 threads.
Compressing objects: 100% (12/12), done.
Writing objects: 100% (13/13), 3.95 KiB, done.
Total 13 (delta 3), reused 0 (delta 0)
To git@github.com:leniel/iPhone-Beginner-Guide.git
05ec270..fe84a7a master -> master
Branch master set up to track remote branch master from origin.
Leniel-Macaferis-Mac-mini:C Main Project leniel$
```

Great! With the above commands I have sent both projects to my repo located at GitHub.

It’s important to note that for each project I created in Xcode I selected the option to create a local git repository as shown in Figure 1:

Figure 1 - Xcode - Selecting Create local git repository for this project
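Because each project folder started out as its own repository, the two projects shared no commits, which is why the second push was rejected and the pull printed "warning: no common commits". Git 2.9 and later go further and refuse such a merge unless you pass --allow-unrelated-histories to git pull. The sketch below replays the same steps against a local bare repository that stands in for GitHub; the scratch paths, file contents and example identity are hypothetical, and it assumes Git 2.28 or later for `git init -b`.

```shell
#!/bin/sh
# Sketch only: replays the two-project push from the transcript against a
# local bare repository standing in for GitHub. All paths are hypothetical.
set -e
export GIT_AUTHOR_NAME="Example" GIT_AUTHOR_EMAIL="example@example.com"
export GIT_COMMITTER_NAME="Example" GIT_COMMITTER_EMAIL="example@example.com"

DEMO="${TMPDIR:-/tmp}/xcode-git-push-demo"
rm -rf "$DEMO"
mkdir -p "$DEMO"
git init -q --bare -b master "$DEMO/remote.git"   # stand-in for the GitHub repo

# One repository per project, roughly what Xcode's
# "Create local git repository" option sets up.
for project in "QuickStart" "C Main Project"; do
  mkdir -p "$DEMO/Local/$project/$project"
  cd "$DEMO/Local/$project"
  git init -q -b master
  echo "// $project sample" > "$project/main.m"
  git add .
  git commit -q -m "Initial commit of $project"
  git remote add origin "$DEMO/remote.git"
done

# First push succeeds because the remote is still empty.
cd "$DEMO/Local/QuickStart"
git push -q -u origin master

# Second push is rejected (non-fast-forward): the histories share no commits.
cd "$DEMO/Local/C Main Project"
if ! git push -q -u origin master 2>/dev/null; then
  # Git 2.9+ refuses to merge unrelated histories without this flag.
  git pull -q --no-rebase --no-edit --allow-unrelated-histories origin master
  git push -q -u origin master
fi
```

Afterwards the stand-in remote's master contains both project folders, mirroring what the GitHub repo held after the merge and second push in the transcript.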


With this in place, I can now safely delete my local copy of both projects (the Local folder I used in the Terminal commands above) and work directly with the code of my remote repository. Let's do it:

Open the Xcode Organizer by selecting the menu Window => Organizer:

Figure 2 - Organizer window accessible through Xcode’s Window menu


I assume you have already configured your GitHub repo (green circle in Figure 2) and added it to the Organizer with the + button at the bottom left of Figure 2, following the docs I linked above.

To get a local working copy of your remote repository, click the Clone button (see the bottom part of Figure 2) and choose a location for the repo files. After doing this you'll get a new repo (mine is located in the folder /iPhone/iPhone-Beginner-Guide, as you can see in Figure 2). When I click my local copy of the repo, I get this beautiful screen where I can see the commit comments and the changes I made to each file along the way:
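For reference, the Organizer's Clone button corresponds to git clone on the command line. A minimal sketch, assuming a local bare repository that stands in for the GitHub repo; the paths, seed commit and example identity are hypothetical, and Git 2.28 or later is assumed for `git init -b`:

```shell
#!/bin/sh
# Sketch only: command-line counterpart of the Organizer's Clone button.
# A local bare repository stands in for GitHub; all paths are hypothetical.
set -e
export GIT_AUTHOR_NAME="Example" GIT_AUTHOR_EMAIL="example@example.com"
export GIT_COMMITTER_NAME="Example" GIT_COMMITTER_EMAIL="example@example.com"

DEMO="${TMPDIR:-/tmp}/xcode-git-clone-demo"
rm -rf "$DEMO"
mkdir -p "$DEMO"

# Seed the stand-in remote with one commit.
git init -q --bare -b master "$DEMO/remote.git"
git init -q -b master "$DEMO/seed"
cd "$DEMO/seed"
echo "// QuickStart sample" > main.m
git add main.m
git commit -q -m "Add QuickStart sample"
git push -q "$DEMO/remote.git" master

# Clone into a new folder, as the Organizer does once you pick a location.
# Against GitHub this would be:
#   git clone git@github.com:leniel/iPhone-Beginner-Guide.git
git clone -q "$DEMO/remote.git" "$DEMO/iPhone-Beginner-Guide"
cd "$DEMO/iPhone-Beginner-Guide"
git log --oneline   # the commit history the Organizer displays graphically
```

The clone's master branch is set up to track origin/master automatically, which is what lets later pushes and pulls work without naming the remote branch.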

Figure 3 - Local (clone) copy of my online GitHub repository seen in Xcode Organizer


Now it's just a matter of modifying the project files or adding new projects and committing them to the repository through the menu File => Source Control => Commit…

One more important note: when you commit something, it's committed only to your local copy. You need one additional step: push the changes to GitHub. In Xcode you can select the file(s) or project(s) you want and go to File => Source Control => Push… For more on this, read: Commit Files to Add Them to a Repository.
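The commit/push distinction is easy to see from Terminal. This sketch uses hypothetical paths, an example identity and a local bare repository standing in for GitHub (Git 2.28 or later assumed for `git init -b`); it shows that the remote has no branches after a commit and only gets one after a push. The comments note which Xcode menu item each step roughly corresponds to.

```shell
#!/bin/sh
# Sketch only: commit is local, push publishes. A local bare repository
# stands in for GitHub; all paths are hypothetical.
set -e
export GIT_AUTHOR_NAME="Example" GIT_AUTHOR_EMAIL="example@example.com"
export GIT_COMMITTER_NAME="Example" GIT_COMMITTER_EMAIL="example@example.com"

DEMO="${TMPDIR:-/tmp}/xcode-git-commit-demo"
rm -rf "$DEMO"
mkdir -p "$DEMO"
git init -q --bare -b master "$DEMO/remote.git"
git init -q -b master "$DEMO/work"
cd "$DEMO/work"
git remote add origin "$DEMO/remote.git"

# Roughly File => Source Control => Commit...: record the change locally.
echo "// QuickStart sample" > main.m
git add main.m
git commit -q -m "Add QuickStart sample"
echo "Remote branches after commit:"
git ls-remote --heads origin   # prints nothing: the commit is still only local

# Roughly File => Source Control => Push...: send local commits to the remote.
git push -q -u origin master
echo "Remote branches after push:"
git ls-remote --heads origin   # now lists refs/heads/master
```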

In my case, when I select Push I get this Xcode dialog where I can select the Remote endpoint (GitHub repository) to which my committed files will go:

Figure 4 - Xcode Push user interface and GitHub remote location


As a bonus, I created a workspace, shown in Figure 5, to have all the sample projects at hand in a single Xcode window. The workspace holds references to the projects and works somewhat like a Microsoft Visual Studio solution file, if you're used to Microsoft developer tools. The workspace helps a lot during the commit and push tasks!

Figure 5 - Xcode workspace with projects at left side pane


Well, I'm new to this Xcode world, and I think I'll learn a lot from these simple beginner sample projects.

The next thing I'm gonna do is learn what file types I can ignore when committing… Thanks to StackOverflow there’s already a question addressing this very topic: Git ignore file for Xcode projects

Edit: following the advice of the above StackOverflow question, I added a .gitignore file to the repo.
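A minimal sketch of such a .gitignore, using entries commonly suggested for Xcode 4-era projects; it is not necessarily the exact file in the repo. The script builds a hypothetical project layout in a scratch folder and uses git add -A to confirm that only the real project file gets staged (Git 2.28 or later assumed for `git init -b`):

```shell
#!/bin/sh
# Sketch only: a .gitignore with entries commonly suggested for Xcode 4-era
# projects; not necessarily the repo's exact file. Paths are hypothetical.
set -e
DEMO="${TMPDIR:-/tmp}/xcode-gitignore-demo"
rm -rf "$DEMO"
mkdir -p "$DEMO/repo"
git init -q -b master "$DEMO/repo"
cd "$DEMO/repo"

cat > .gitignore <<'EOF'
# Build products
build/
DerivedData/
# Per-user Xcode state (Xcode 4 and older Xcode 3 files)
xcuserdata/
*.xcuserstate
*.pbxuser
*.mode1v3
*.mode2v3
*.perspectivev3
# Finder metadata
.DS_Store
EOF

# Fake project layout to check the rules against.
mkdir -p build "QuickStart.xcodeproj/project.xcworkspace/xcuserdata/leniel.xcuserdatad"
touch build/QuickStart.o .DS_Store QuickStart.xcodeproj/project.pbxproj \
  "QuickStart.xcodeproj/project.xcworkspace/xcuserdata/leniel.xcuserdatad/UserInterfaceState.xcuserstate"

git add -A
git ls-files   # lists only .gitignore and QuickStart.xcodeproj/project.pbxproj
```

Patterns with only a trailing slash (build/, xcuserdata/) match directories at any depth, which is why the per-user state buried inside project.xcworkspace is skipped as well.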

Hope this helps.


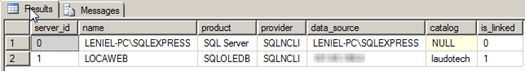

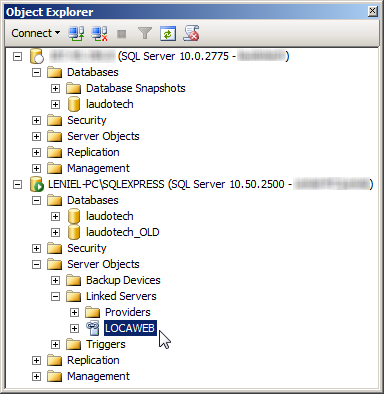
