I’m going to share a pretty nice deployment automation solution for when you need a defined set of folders in place the first time you deploy an app to IIS. On subsequent redeployments the folder structure is kept intact, along with any files users may have added to it.

Let’s work with a simple example: given an ASP.NET MVC app, we need a folder called Files, and inside it some predefined folders named Folder 1, Folder 2 and Folder 3, the last one with a child folder called Test. Something like this:

    App root
    |
    ---Files
       |
       ---Folder 1
       ---Folder 2
       ---Folder 3
          |
          ---Test

When deploying for the first time these folders are empty, but the folder structure is mandatory. Let’s say I’m using a file manager like elFinder.Net that expects these folders to exist on the server. Why? Because the ASP.NET MVC app has links pointing to these folders in some views. The folders should be ready to store files when the app is released. Another case that comes to mind is needing an existing Downloads/Uploads folder.

What’s more? We also want all this to happen while using the Publish Web command from within Visual Studio and still keeping the option Remove additional files at destination checked:

Figure 1 - Visual Studio Publish Web with File Publish Options => Remove additional files at destination


This setting is nice because when you update the jQuery NuGet package, for example (jquery-2.1.1.js), it will send the new files to the IIS server and remove the old version (jquery-2.1.0.js) that exists there. This is really important so that the app keeps working as expected and doesn’t load the wrong version or duplicate files. If we don’t check that option, we have to go to the server and delete the old files manually. What a cumbersome and error-prone task!

What to do when we want the deployment task to do the work “automagically” for us with no human intervention? It seems like a lot of requirements, and the task is not as simple as it appears to be… Yep, it requires a little bit of MSDeploy codez.

Here’s what is working for me at the moment after finding some code pieces from here and there:

Given a publish profile named Local.pubxml that sits here:

C:\Company\Company.ProjectName\Company.ProjectName.Web\Properties\PublishProfiles\Local.pubxml

Let’s add the code blocks necessary to make all the requirements come to life:

<?xml version="1.0" encoding="utf-8"?>
<!--
This file is used by the publish/package process of your Web project. You can customize the behavior of this process
by editing this MSBuild file. In order to learn more about this please visit http://go.microsoft.com/fwlink/?LinkID=208121.
-->
<Project ToolsVersion="4.0" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <PropertyGroup>
    <AfterAddIisSettingAndFileContentsToSourceManifest>AddCustomSkipRules</AfterAddIisSettingAndFileContentsToSourceManifest>
    <WebPublishMethod>MSDeploy</WebPublishMethod>
    <LastUsedBuildConfiguration>Local</LastUsedBuildConfiguration>
    <LastUsedPlatform>Any CPU</LastUsedPlatform>
    <SiteUrlToLaunchAfterPublish />
    <ExcludeApp_Data>False</ExcludeApp_Data>
    <MSDeployServiceURL>localhost</MSDeployServiceURL>
    <DeployIisAppPath>SuperCoolAwesomeAppName</DeployIisAppPath>
    <RemoteSitePhysicalPath />
    <SkipExtraFilesOnServer>False</SkipExtraFilesOnServer>
    <MSDeployPublishMethod>WMSVC</MSDeployPublishMethod>
    <AllowUntrustedCertificate>True</AllowUntrustedCertificate>
    <EnableMSDeployBackup>False</EnableMSDeployBackup>
    <UserName />
    <_SavePWD>False</_SavePWD>
    <LaunchSiteAfterPublish>True</LaunchSiteAfterPublish>
  </PropertyGroup>
  <PropertyGroup>
    <UseMsDeployExe>true</UseMsDeployExe>
  </PropertyGroup>
  <Target Name="CreateEmptyFolders">
    <Message Text="Adding empty folders to Files" />
    <MakeDir Directories="$(_MSDeployDirPath_FullPath)\Files\Folder 1" />
    <MakeDir Directories="$(_MSDeployDirPath_FullPath)\Files\Folder 2" />
    <MakeDir Directories="$(_MSDeployDirPath_FullPath)\Files\Folder 3\Test" />
  </Target>
  <Target Name="AddCustomSkipRules" DependsOnTargets="CreateEmptyFolders">
    <Message Text="Adding Custom Skip Rules" />
    <ItemGroup>
      <MsDeploySkipRules Include="SkipFilesInFilesFolder">
        <SkipAction>Delete</SkipAction>
        <ObjectName>filePath</ObjectName>
        <AbsolutePath>$(_DestinationContentPath)\\Files\\.*</AbsolutePath>
        <Apply>Destination</Apply>
      </MsDeploySkipRules>
      <MsDeploySkipRules Include="SkipFoldersInFilesFolders">
        <SkipAction></SkipAction>
        <ObjectName>dirPath</ObjectName>
        <AbsolutePath>$(_DestinationContentPath)\\Files\\.*\\*</AbsolutePath>
        <Apply>Destination</Apply>
      </MsDeploySkipRules>
    </ItemGroup>
  </Target>
</Project>

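This is self-explanatory. Pay attention to the custom parts (the AfterAddIisSettingAndFileContentsToSourceManifest property, the UseMsDeployExe property and the two targets), as they are the glue that makes all the requirements happen during the publish action.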

What is going on?

The property

<AfterAddIisSettingAndFileContentsToSourceManifest>AddCustomSkipRules</AfterAddIisSettingAndFileContentsToSourceManifest>

calls the target

<Target Name="AddCustomSkipRules" DependsOnTargets="CreateEmptyFolders">

which in turn depends on the other target

<Target Name="CreateEmptyFolders">

CreateEmptyFolders takes care of adding/creating the folder structure on the server if it doesn’t exist yet.
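If the list of folders grows, the three MakeDir calls could be driven from a single item list instead. This is only a sketch of that idea, not what the profile above uses: the item name EmptyFolder is hypothetical, and it relies on the same $(_MSDeployDirPath_FullPath) property as the original target.

```xml
<!-- Sketch: same CreateEmptyFolders target, driven by an item list. -->
<!-- "EmptyFolder" is a made-up item name used only for illustration. -->
<Target Name="CreateEmptyFolders">
  <Message Text="Adding empty folders to Files" />
  <ItemGroup>
    <!-- One entry per folder, relative to the app root -->
    <EmptyFolder Include="Files\Folder 1;Files\Folder 2;Files\Folder 3\Test" />
  </ItemGroup>
  <!-- The item transform prefixes every entry with the same base path used above -->
  <MakeDir Directories="@(EmptyFolder->'$(_MSDeployDirPath_FullPath)\%(Identity)')" />
</Target>
```

Adding a new pre-defined folder then means adding one more entry to the Include list.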

AddCustomSkipRules contains two <MsDeploySkipRules...>. One prevents deleting the files inside the Files folder and the other prevents deleting its child folders.
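The same pattern should extend to other folders, such as the Uploads folder mentioned earlier. Here is a sketch under that assumption: the Uploads folder and both rule names are hypothetical, and the rules simply mirror the shape of the Files rules above, so test it against your own server before relying on it.

```xml
<!-- Sketch only: extra items for the ItemGroup inside the AddCustomSkipRules target. -->
<!-- "Uploads" and both rule names are hypothetical; the shape mirrors the Files rules. -->
<ItemGroup>
  <!-- Keep files that users added under Uploads -->
  <MsDeploySkipRules Include="SkipFilesInUploadsFolder">
    <SkipAction>Delete</SkipAction>
    <ObjectName>filePath</ObjectName>
    <AbsolutePath>$(_DestinationContentPath)\\Uploads\\.*</AbsolutePath>
    <Apply>Destination</Apply>
  </MsDeploySkipRules>
  <!-- Keep the child folders of Uploads -->
  <MsDeploySkipRules Include="SkipFoldersInUploadsFolder">
    <SkipAction></SkipAction>
    <ObjectName>dirPath</ObjectName>
    <AbsolutePath>$(_DestinationContentPath)\\Uploads\\.*\\*</AbsolutePath>
    <Apply>Destination</Apply>
  </MsDeploySkipRules>
</ItemGroup>
```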

Check the targets’ logic. They’re pretty easy to understand…

Note: make sure you don’t forget the

<UseMsDeployExe>true</UseMsDeployExe>

otherwise you may see this error during deployment:

Error 4 Web deployment task failed. (Unrecognized skip directive 'skipaction'. Must be one of the following: "objectName," "keyAttribute," "absolutePath," "xPath," "attributes.<name>.") 0 0 Company.ProjectName.Web

Simple as pie after we see it working. Isn’t it?

Hope it helps!

As an interesting point, see the command executed by msdeploy.exe that gets written to the output window in Visual Studio:

C:\Program Files (x86)\IIS\Microsoft Web Deploy V3\msdeploy.exe -source:manifest='C:\Company\Company.ProjectName\Company.ProjectName.Web\obj\Local\Package\Company.ProjectName.Web.SourceManifest.xml' -dest:auto,IncludeAcls='False',AuthType='NTLM' -verb:sync -disableLink:AppPoolExtension -disableLink:ContentExtension -disableLink:CertificateExtension -skip:skipaction='Delete',objectname='filePath',absolutepath='\\Files\\.*' -skip:objectname='dirPath',absolutepath='\\Files\\.*\\*' -setParamFile:"C:\Company\Company.ProjectName\Company.ProjectName.Web\obj\Local\Package\Company.ProjectName.Web.Parameters.xml" -retryAttempts=2 -userAgent="VS12.0:PublishDialog:WTE2.3.50425.0"

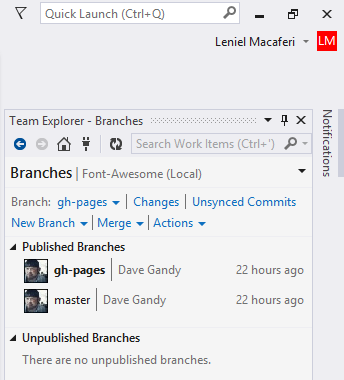

Figure 1 - Checkout remote branch (not master) named gh-pages to a local branch with upstream tracking

Figure 1 - Checkout remote branch (not master) named gh-pages to a local branch with upstream tracking Figure 2 - gh-pages branch appears in Published Branches in Visual Studio 2013 Team Explorer window

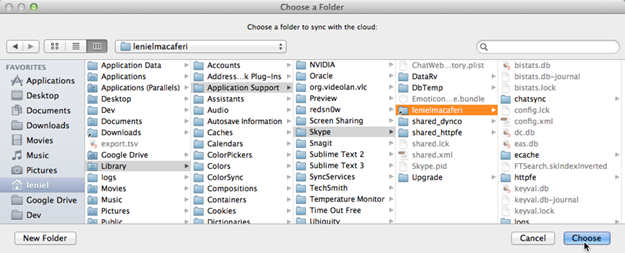

Figure 2 - gh-pages branch appears in Published Branches in Visual Studio 2013 Team Explorer window![Figure 3 - Creating a tracking branch with Git Gui with the same name [ 4.1.0-wip ] as defined in origin Figure 3 - Creating a tracking branch with Git Gui with the same name [ 4.1.0-wip ] as defined in origin](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhspYQINpBmzJ8Cpp2gOlGbf5Uigmsskxkm_VxZ5GC2_o8Tfx3PsKT7G4SjShRGGB_uZxpF_sYYPb5jNM-yBcKRXg7buEW0-doDiDGIpZQhwmowdSRyrXplJMl0AVE57hVgqXCpr9SS7m8/?imgmax=800) Figure 3 - Creating a tracking branch with Git Gui with the same name [ 4.1.0-wip ] as defined in origin

Figure 3 - Creating a tracking branch with Git Gui with the same name [ 4.1.0-wip ] as defined in origin

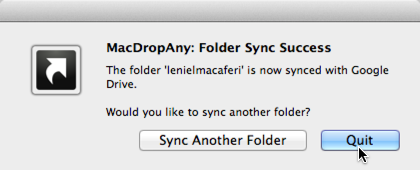

![MacDropAnyChooseCloudServiceProvider_thumb[1] MacDropAnyChooseCloudServiceProvider_thumb[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEj1BVYJWKtgYG80Vx4J2seBwI63sitksQ3BeQTHSOqIpZlQqtOLhg_gxNKZ9IiWadUGODIr8LuSgQazrhtDAtzrTdZjSWCKmzYsUAWlXpneRPvT0d2OFG6oh9eQ1qkwe3cOvV38jkS-TUo/?imgmax=800)